- Python auto tune software how to

- Python auto tune software code

- Python auto tune software download

Hyperparameters are simply the knobs and levers you pull and turn when building a machine learning classifier. The process of tuning hyperparameters is more formally called hyperparameter optimization.

So what’s the difference between a normal “model parameter” and a “hyperparameter”? A standard “model parameter” is normally an internal variable that is optimized in some fashion. In the context of Linear Regression, Logistic Regression, and Support Vector Machines, we would think of parameters as the weight vector coefficients found by the learning algorithm. “Hyperparameters”, on the other hand, are normally set by a human designer or tuned via algorithmic approaches.
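To make the distinction concrete, here is a minimal sketch using a one-dimensional linear model. The function name `fit_ridge_1d`, the penalty strength `lam`, and the toy data are all assumptions introduced for illustration; they do not come from the original post. The weight `w` is a model parameter computed from the data, while `lam` is a hyperparameter we pick before fitting.

```python
def fit_ridge_1d(xs, ys, lam):
    """Fit y ~ w * x by least squares with an L2 penalty of strength `lam`.

    `lam` is a hyperparameter: we choose it before training.
    The returned weight `w` is a model parameter: the data determines it.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # Closed-form minimizer of sum((y - w*x)^2) + lam * w^2.
    return sxy / (sxx + lam)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # toy data generated from y = 2x (illustrative only)

# Same data, different hyperparameter values, different learned parameter.
for lam in (0.0, 1.0, 10.0):
    w = fit_ridge_1d(xs, ys, lam)
    print(f"lam={lam:>4}: learned w = {w:.3f}")
```

Changing `lam` never touches the training data, yet it changes which `w` the fitting procedure returns. That is the sense in which a hyperparameter sits "above" the learned parameters.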

In the remainder of today’s tutorial, I’ll be demonstrating how to tune k-NN hyperparameters for the Dogs vs. Cats dataset. We’ll start with a discussion of what hyperparameters are, followed by a concrete example of tuning k-NN hyperparameters. We’ll then explore how to tune k-NN hyperparameters using two search methods: Grid Search and Randomized Search.

As our results will demonstrate, we can improve our classification accuracy from 57.58% to over 64%!
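The difference between the two search methods can be sketched in plain Python. This is an illustration under stated assumptions, not the code from the post: `grid_search`, `random_search`, and `toy_score` are hypothetical helpers, and `toy_score` is a made-up stand-in for a real validation accuracy. In practice, scikit-learn provides `GridSearchCV` and `RandomizedSearchCV` in `sklearn.model_selection` for this job.

```python
import itertools
import random


def grid_search(evaluate, grid):
    """Try every combination in `grid`; return (best_score, best_params)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[n] for n in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def random_search(evaluate, grid, n_iter, seed=0):
    """Evaluate only `n_iter` randomly chosen combinations from `grid`."""
    rng = random.Random(seed)
    names = list(grid)
    combos = list(itertools.product(*(grid[n] for n in names)))
    best = None
    for values in rng.sample(combos, n_iter):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def toy_score(k, metric):
    # Hypothetical stand-in for validation accuracy (not real results).
    bonus = 0.02 if metric == "manhattan" else 0.0
    return 0.6 - 0.01 * abs(k - 7) + bonus


grid = {"k": [1, 3, 5, 7, 9, 11], "metric": ["euclidean", "manhattan"]}
print("grid search:  ", grid_search(toy_score, grid))
print("random search:", random_search(toy_score, grid, n_iter=4))
```

Grid search evaluates all 12 combinations and is guaranteed to find the best one in the grid. Random search evaluates only 4 here, so it is cheaper but may settle for a slightly worse setting. That trade-off matters more as the number of hyperparameters grows.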

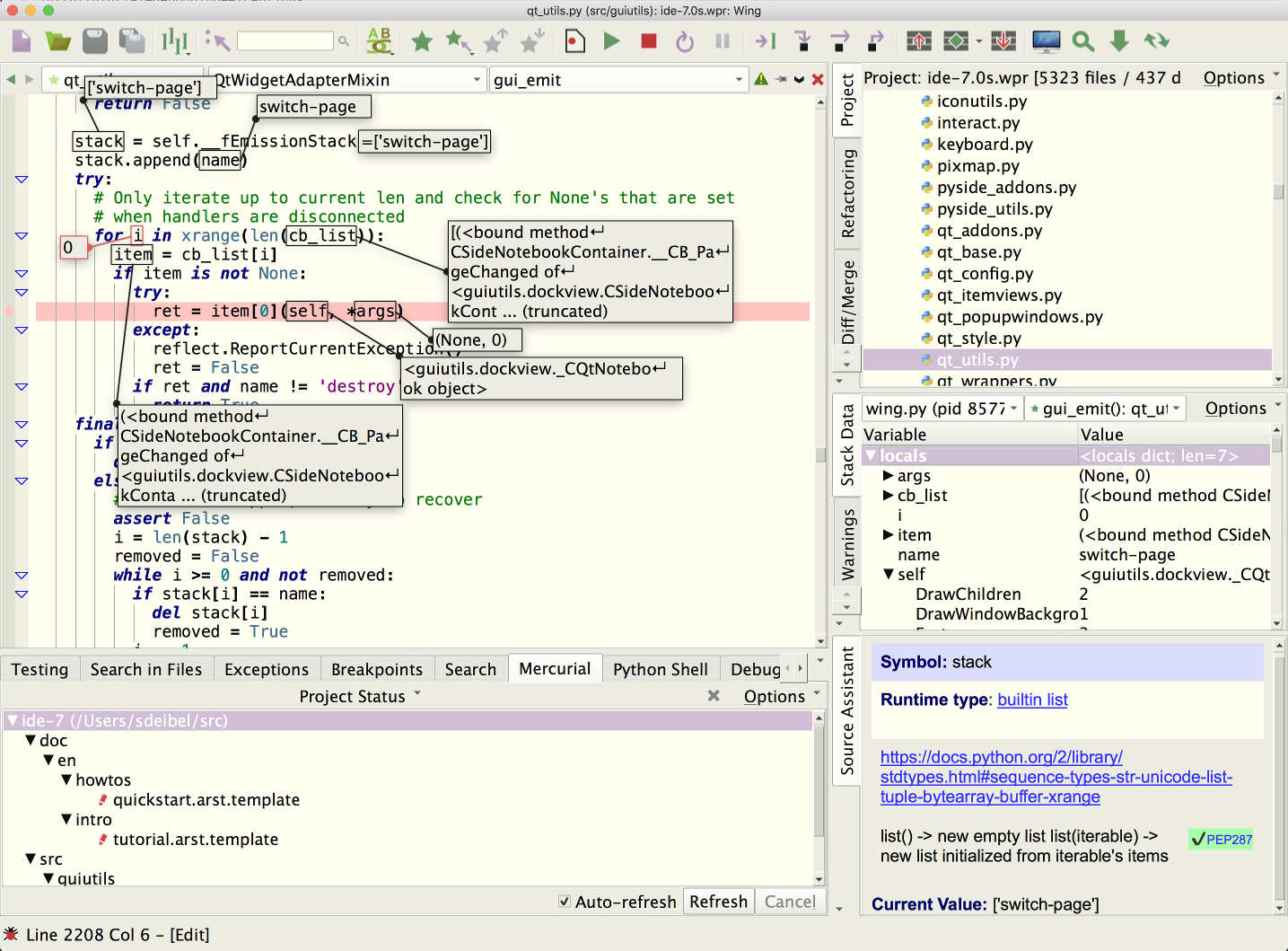

How to tune hyperparameters with Python and scikit-learn

In last week’s post, I introduced the k-NN machine learning algorithm, which we then applied to the task of image classification. Using the k-NN algorithm, we obtained 57.58% classification accuracy on the Kaggle Dogs vs. Cats dataset challenge:

Figure 1: Classifying an image as whether it contains a dog or a cat.

Can we do better? Of course we can! Obtaining higher accuracy for nearly any machine learning algorithm boils down to tweaking various knobs and levers. In the case of k-NN, we can tune k, the number of nearest neighbors. We can also tune our distance metric/similarity function.

Of course, hyperparameter tuning has implications outside of the k-NN algorithm as well. In the context of Deep Learning and Convolutional Neural Networks, we can easily have hundreds of hyperparameters to tune and play with (although in practice we try to limit the number of variables to tune to a small handful), each affecting our overall classification to some (potentially unknown) degree.

Because of this, it’s important to understand the concept of hyperparameter tuning and how your choice of hyperparameters can dramatically impact your classification accuracy.
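To show where k and the distance metric enter the k-NN algorithm, here is a small self-contained sketch. It is not the post's implementation: `knn_predict` is a hypothetical helper, and the two-dimensional points are invented feature vectors standing in for image features.

```python
from collections import Counter


def euclidean(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


def knn_predict(train, query, k, distance):
    """Label `query` by majority vote among its k nearest training points."""
    neighbors = sorted(train, key=lambda item: distance(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Invented 2-D feature vectors (illustrative only, not real image features).
train = [
    ((1.0, 1.0), "cat"), ((1.5, 2.0), "cat"), ((2.0, 1.0), "cat"),
    ((6.0, 6.0), "dog"), ((7.0, 5.5), "dog"), ((6.5, 7.0), "dog"),
    ((2.5, 2.5), "dog"),  # a noisy point sitting inside the "cat" cluster
]
query = (2.4, 2.2)

# Both hyperparameters are passed in; neither is learned from the data.
for k in (1, 3):
    for distance in (euclidean, manhattan):
        label = knn_predict(train, query, k, distance)
        print(f"k={k}, {distance.__name__}: {label}")
```

With k=1 the single noisy neighbor decides the vote, while k=3 lets the surrounding points outvote it. On this toy data the choice of metric does not change the answer, but on real feature vectors it can.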
